diff --git a/.gitignore b/.gitignore

index 1ea79bb..c998d8c 100644

--- a/.gitignore

+++ b/.gitignore

@@ -170,5 +170,5 @@ cython_debug/

# Demo file

flagged/

*.sh

-!exec_filter.sh

+!sanitize.sh

logs/

diff --git a/README-SC2INST.md b/README-SC2INST.md

new file mode 100644

index 0000000..b5a9078

--- /dev/null

+++ b/README-SC2INST.md

@@ -0,0 +1,328 @@

+# StarCoder2-Instruct: Fully Transparent and Permissive Self-Alignment for Code Generation

+

+

+ ⭐️ About

+ | 🚀 Quick start

+ | 📚 Data generation

+ | 🧑💻 Training

+ | 📊 Evaluation

+ | ⚠️ Limitations

+

+

+

+

+

+

+## About

+

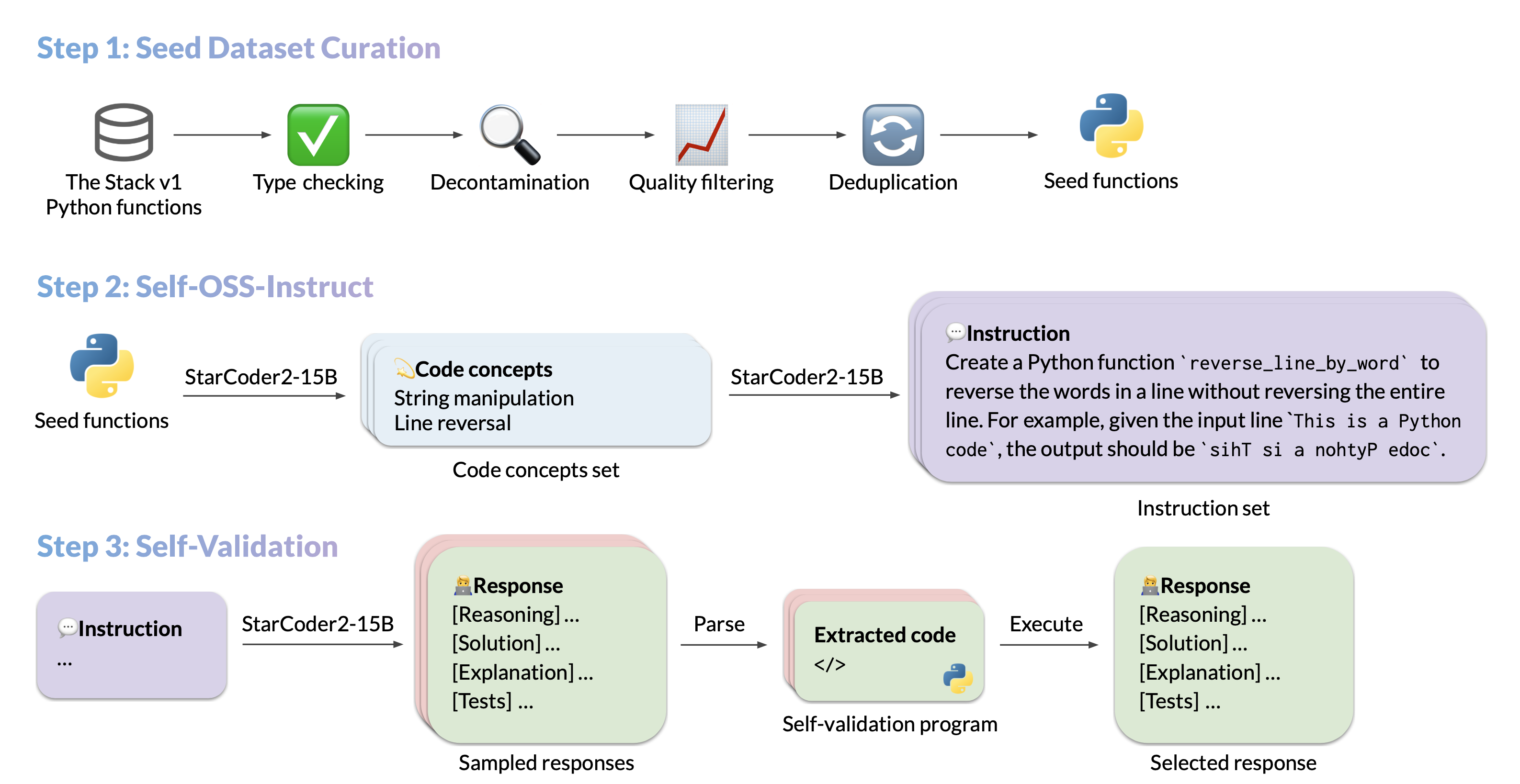

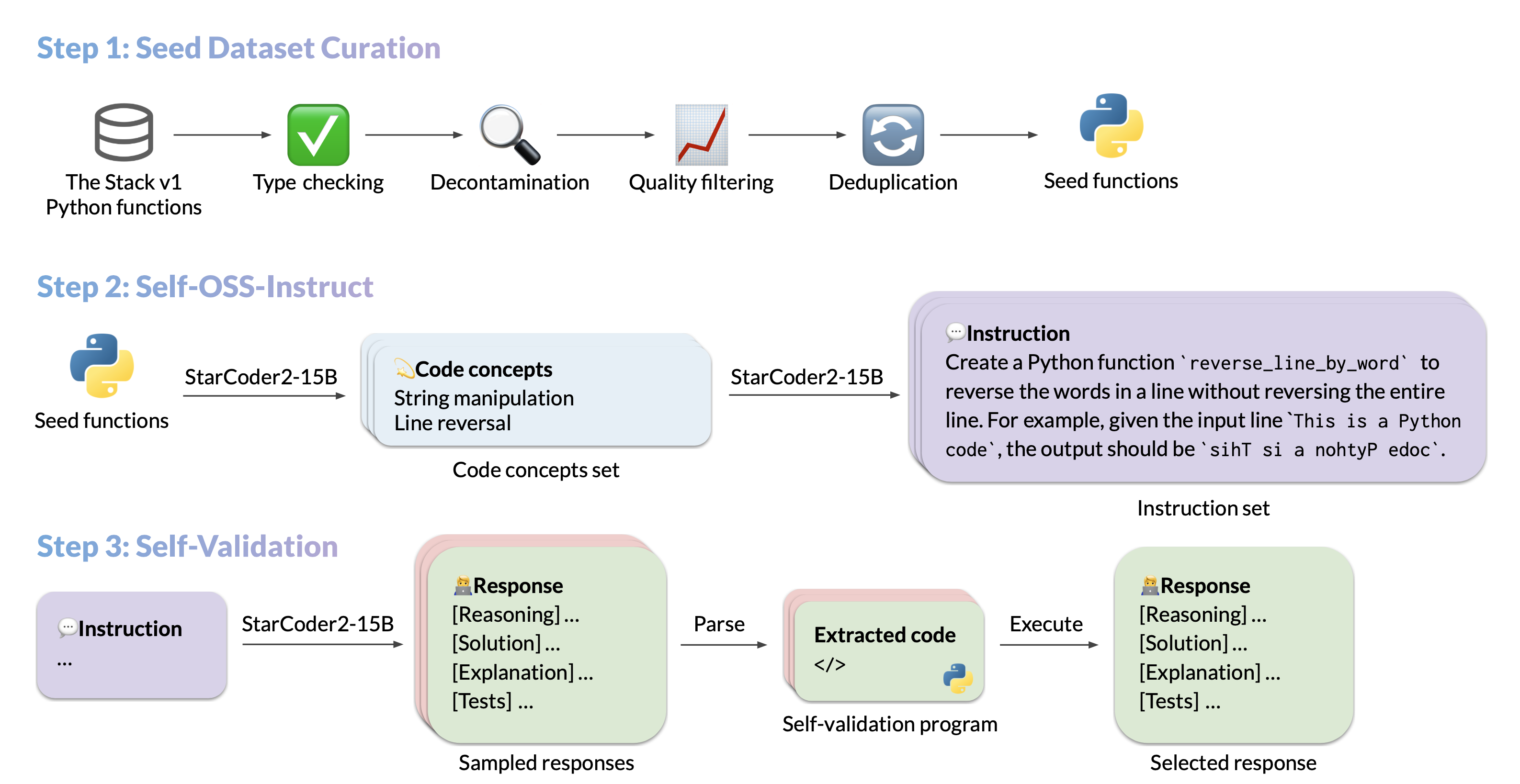

+We introduce StarCoder2-15B-Instruct-v0.1, the very first entirely self-aligned code Large Language Model (LLM) trained with a fully permissive and transparent pipeline. Our open-source pipeline uses StarCoder2-15B to generate thousands of instruction-response pairs, which are then used to fine-tune StarCoder-15B itself without any human annotations or distilled data from huge and proprietary LLMs.

+

+- **Model:** [bigcode/starcoder2-15b-instruct-v0.1](https://huggingface.co/bigcode/starcoder2-instruct-15b-v0.1)

+- **Code:** [bigcode-project/starcoder2-self-align](https://github.com/bigcode-project/starcoder2-self-align)

+- **Dataset:** [bigcode/self-oss-instruct-sc2-exec-filter-50k](https://huggingface.co/datasets/bigcode/self-oss-instruct-sc2-exec-filter-50k/)

+- **Authors:**

+[Yuxiang Wei](https://yuxiang.cs.illinois.edu),

+[Federico Cassano](https://federico.codes/),

+[Jiawei Liu](https://jw-liu.xyz),

+[Yifeng Ding](https://yifeng-ding.com),

+[Naman Jain](https://naman-ntc.github.io),

+[Harm de Vries](https://www.harmdevries.com),

+[Leandro von Werra](https://twitter.com/lvwerra),

+[Arjun Guha](https://www.khoury.northeastern.edu/home/arjunguha/main/home/),

+[Lingming Zhang](https://lingming.cs.illinois.edu).

+

+

+

+## Quick start

+

+Here is an example to get started with StarCoder2-15B-Instruct-v0.1 using the [transformers](https://huggingface.co/docs/transformers/index) library:

+

+```python

+import transformers

+import torch

+

+pipeline = transformers.pipeline(

+ model="bigcode/starcoder2-15b-instruct-v0.1",

+ task="text-generation",

+ torch_dtype=torch.bfloat16,

+ device_map="auto",

+)

+

+def respond(instruction: str, response_prefix: str) -> str:

+ messages = [{"role": "user", "content": instruction}]

+ prompt = pipeline.tokenizer.apply_chat_template(messages, tokenize=False)

+ prompt += response_prefix

+

+ teminators = [

+ pipeline.tokenizer.eos_token_id,

+ pipeline.tokenizer.convert_tokens_to_ids("###"),

+ ]

+

+ result = pipeline(

+ prompt,

+ max_length=256,

+ num_return_sequences=1,

+ do_sample=False,

+ eos_token_id=teminators,

+ pad_token_id=pipeline.tokenizer.eos_token_id,

+ truncation=True,

+ )

+ response = response_prefix + result[0]["generated_text"][len(prompt) :].split("###")[0].rstrip()

+ return response

+

+

+instruction = "Write a quicksort function in Python with type hints and a 'less_than' parameter for custom sorting criteria."

+response_prefix = ""

+

+print(respond(instruction, response_prefix))

+```

+

+## Data generation pipeline

+

+> Run `pip install -e .` first to install the package locally. Check [seed_gathering](seed_gathering/) for details on how we collected the seeds.

+

+By default, we use in-memory vLLM engine for data generation, but we also provide an option to use vLLM's [OpenAI compatible server](https://docs.vllm.ai/en/latest/serving/openai_compatible_server.html) for data generation.

+

+Set `CUDA_VISIBLE_DEVICES=...` to specify the GPU devices to use for the vLLM engine.

+

+To maximize data generation efficiency, we recommend invoking the script multiple times with different `seed_code_start_index` and `max_new_data` values, each with an vLLM engine running on a separate GPU set. For example, for a 100k seed dataset on a 2-GPU machine, you can have 2 processes each generating 50k samples by setting `CUDA_VISIBLE_DEVICES=0 --seed_code_start_index 0 --max_new_data 50000` and `CUDA_VISIBLE_DEVICES=1 --seed_code_start_index 50000 --max_new_data 50000`.

+

+

+

+Click to see how to run with vLLM's OpenAI compatible API

+

+To do so, make sure the vLLM server is running, and the associated `openai` environment variables are set.

+

+For example, you can start an vLLM server with `docker`:

+

+```shell

+docker run --gpus '"device=0"' \

+ -v $HF_HOME:/root/.cache/huggingface \

+ -p 10000:8000 \

+ --ipc=host \

+ vllm/vllm-openai:v0.3.3 \

+ --model bigcode/starcoder2-15b \

+ --tensor-parallel-size 1 --dtype bfloat16

+```

+

+And then set the environment variables as follows:

+

+```shell

+export OPENAI_API_KEY="EMPTY"

+export OPENAI_BASE_URL="http://localhost:10000/v1/"

+```

+

+You will also need to set `--use_vllm_server True` in the following commands.

+

+

+

+

+

+Snippet to concepts generation

+

+```shell

+MODEL=bigcode/starcoder2-15b

+MAX_NEW_DATA=1000000

+python src/star_align/self_ossinstruct.py \

+ --use_vllm_server False \

+ --instruct_mode "S->C" \

+ --seed_data_files /path/to/seeds.jsonl \

+ --max_new_data $MAX_NEW_DATA \

+ --tag concept_gen \

+ --temperature 0.7 \

+ --seed_code_start_index 0 \

+ --model $MODEL \

+ --num_fewshots 8 \

+ --num_batched_requests 2000 \

+ --num_sample_per_request 1

+```

+

+

+

+

+

+Concepts to instruction generation

+

+```shell

+MODEL=bigcode/starcoder2-15b

+MAX_NEW_DATA=1000000

+python src/star_align/self_ossinstruct.py \

+ --instruct_mode "C->I" \

+ --seed_data_files /path/to/concepts.jsonl \

+ --max_new_data $MAX_NEW_DATA \

+ --tag instruction_gen \

+ --temperature 0.7 \

+ --seed_code_start_index 0 \

+ --model $MODEL \

+ --num_fewshots 8 \

+ --num_sample_per_request 1 \

+ --num_batched_request 2000

+```

+

+

+

+

+

+Instruction to response (with self-validation code) generation

+

+```shell

+MODEL=bigcode/starcoder2-15b

+MAX_NEW_DATA=1000000

+python src/star_align/self_ossinstruct.py \

+ --instruct_mode "I->R" \

+ --seed_data_files path/to/instructions.jsonl \

+ --max_new_data $MAX_NEW_DATA \

+ --tag response_gen \

+ --seed_code_start_index 0 \

+ --model $MODEL \

+ --num_fewshots 1 \

+ --num_batched_request 500 \

+ --num_sample_per_request 10 \

+ --temperature 0.7

+```

+

+

+

+

+

+Execution filter

+

+> **Warning:** Though we implemented reliability guards, it is highly recommended to run execution in a sandbox environment we provided.

+

+

+To use the Docker container for executing code, you will first need to `git submodule update --init --recursive` to clone the server, then run:

+

+```shell

+pushd ./src/star_align/code_exec_server

+./pull_and_run.sh

+popd

+python src/star_align/execution_filter.py \

+ --response_paths /path/to/response.jsonl \

+ --result_path /path/to/filtered.jsonl \

+ --max_batched_tasks 10000 \

+ --container_server http://127.0.0.1:8000

+```

+

+Execution filter will produce a flattened list of JSONL entries with a `pass` field indicating whether the execution passed or not. **It also incrementally dumps the results and can load a cached partial data file.** You can recover an execution with:

+

+```shell

+python src/star_align/execution_filter.py \

+ --response_paths /path/to/response.jsonl* \

+ --cache_paths /path/to/filtered.jsonl* \

+ --result_path /path/to/filtered-1.jsonl \

+ --max_batched_tasks 10000 \

+ --container_server http://127.0.0.1:8000

+```

+

+Note that sometimes execution can lead to significant slowdowns due to excessive resource consumption. To alleviate this, you can limit the docker's cpu usage (e.g., `docker run --cpuset-cpus="0-31"`). You can also do:

+

+```shell

+# For example, you can set the command to be `sudo pkill -f '/tmp/codeexec'`

+export CLEANUP_COMMAND="the command to execute after each batch"

+python src/star_align/execution_filter.py...

+```

+

+Also, the container connection may be lost during execution. In this case, you can just leverage the caching mechanism described above to re-run the script.

+

+

+

+

+

+Data sanitization and selection

+

+```shell

+# Uncomment to do decontamination

+# export MBPP_PATH="/path/to/mbpp.jsonl"

+# export DS1000_PATH="/path/to/ds1000_data"

+# export DECONTAMINATION=1

+./sanitize.sh /path/to/exec-filtered.jsonl /path/to/sanitized.jsonl

+```

+

+

+

+## Training Details

+

+> Run `pip install -e .` first to install the package locally. And install [Flash Attention](https://github.com/Dao-AILab/flash-attention) to speed up the training.

+

+### Hyperparameters

+

+- **Optimizer:** Adafactor

+- **Learning rate:** 1e-5

+- **Epoch:** 4

+- **Batch size:** 64

+- **Warmup ratio:** 0.05

+- **Scheduler:** Linear

+- **Sequence length:** 1280

+- **Dropout**: Not applied

+

+### Hardware

+

+1 x NVIDIA A100 80GB. Yes, you just need one A100 to finetune StarCoder2-15B!

+

+### Script

+

+The following script finetunes StarCoder2-15B-Instruct-v0.1 from the base StarCoder2-15B model. `/path/to/dataset.jsonl` is the JSONL format of the [50k dataset](https://huggingface.co/datasets/bigcode/self-oss-instruct-sc2-exec-filter-50k) we generated. You can dump the dataset to JSONL to fit the training script.

+

+

+

+Click to see the training script

+

+NOTE: StarCoder2-15B sets dropout values to 0.1 by default. We did not apply dropout in finetuning and thus set the them to 0.0.

+

+```shell

+MODEL_KEY=bigcode/starcoder2-15b

+LR=1e-5

+EPOCH=4

+SEQ_LEN=1280

+WARMUP_RATIO=0.05

+OUTPUT_DIR=/path/to/output_model

+DATASET_FILE=/path/to/50k-dataset.jsonl

+accelerate launch -m star_align.train \

+ --model_key $MODEL_KEY \

+ --model_name_or_path $MODEL_KEY \

+ --use_flash_attention True \

+ --datafile_paths $DATASET_FILE \

+ --output_dir $OUTPUT_DIR \

+ --bf16 True \

+ --num_train_epochs $EPOCH \

+ --max_training_seq_length $SEQ_LEN \

+ --pad_to_max_length False \

+ --per_device_train_batch_size 1 \

+ --gradient_accumulation_steps 64 \

+ --group_by_length False \

+ --ddp_find_unused_parameters False \

+ --logging_steps 1 \

+ --log_level info \

+ --optim adafactor \

+ --max_grad_norm -1 \

+ --warmup_ratio $WARMUP_RATIO \

+ --learning_rate $LR \

+ --lr_scheduler_type linear \

+ --attention_dropout 0.0 \

+ --residual_dropout 0.0 \

+ --embedding_dropout 0.0

+```

+

+

+

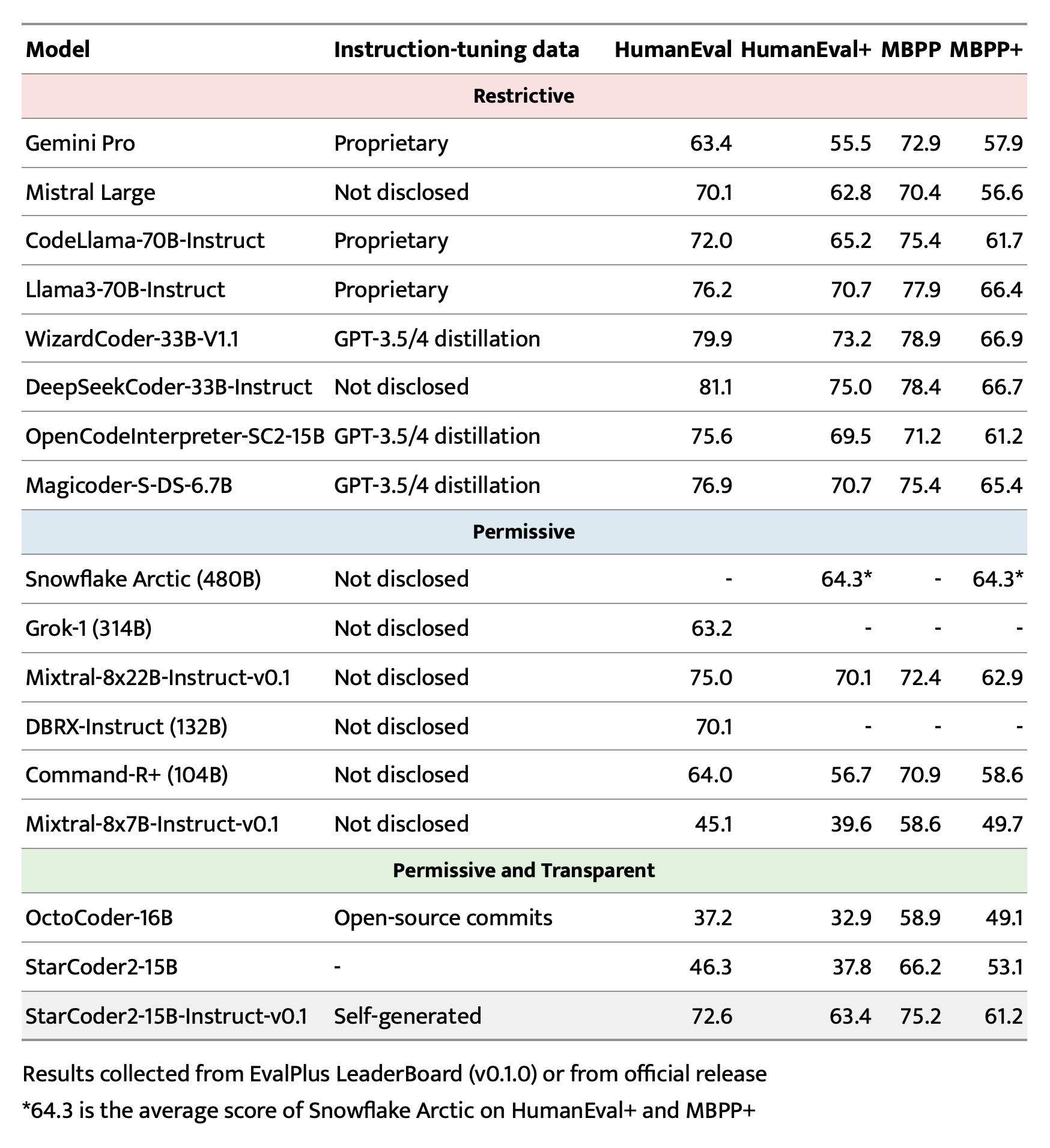

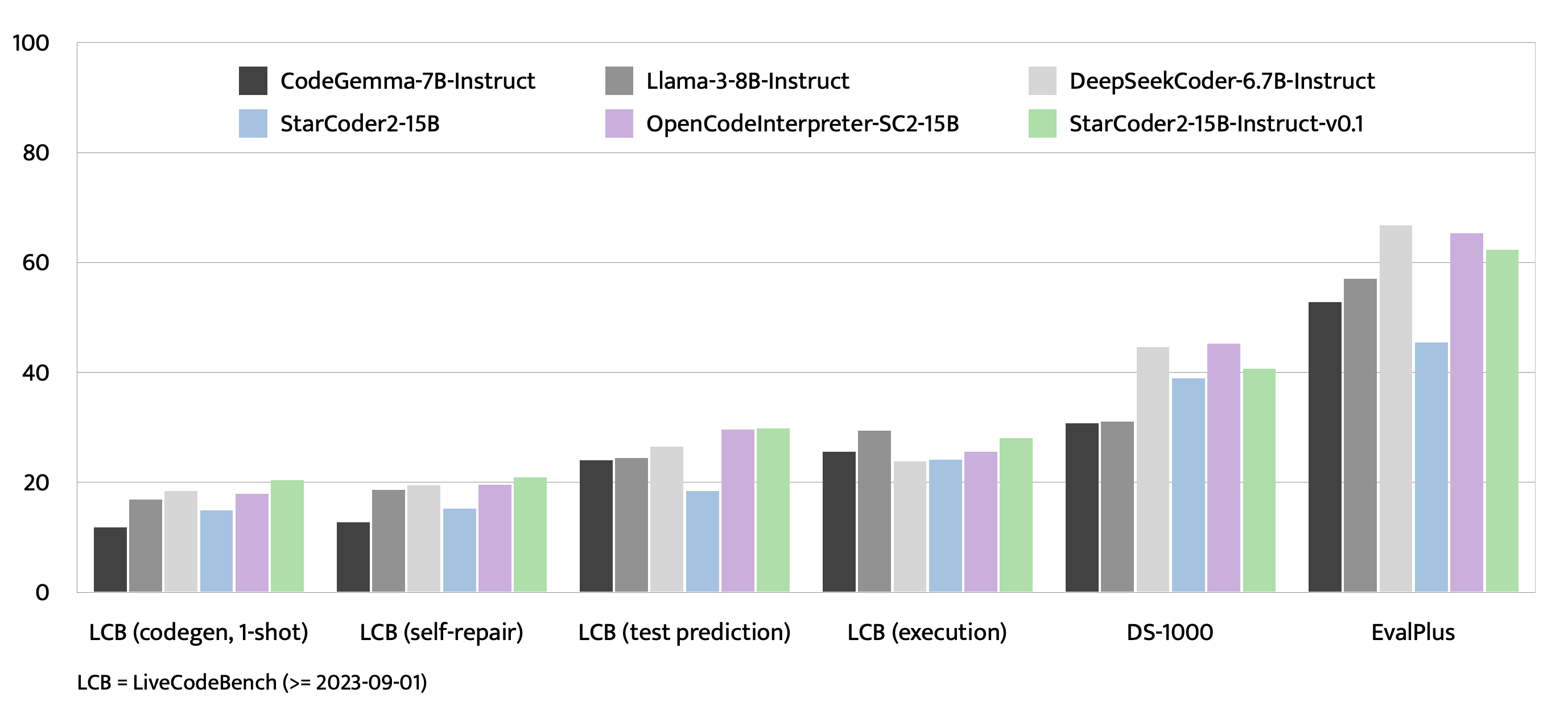

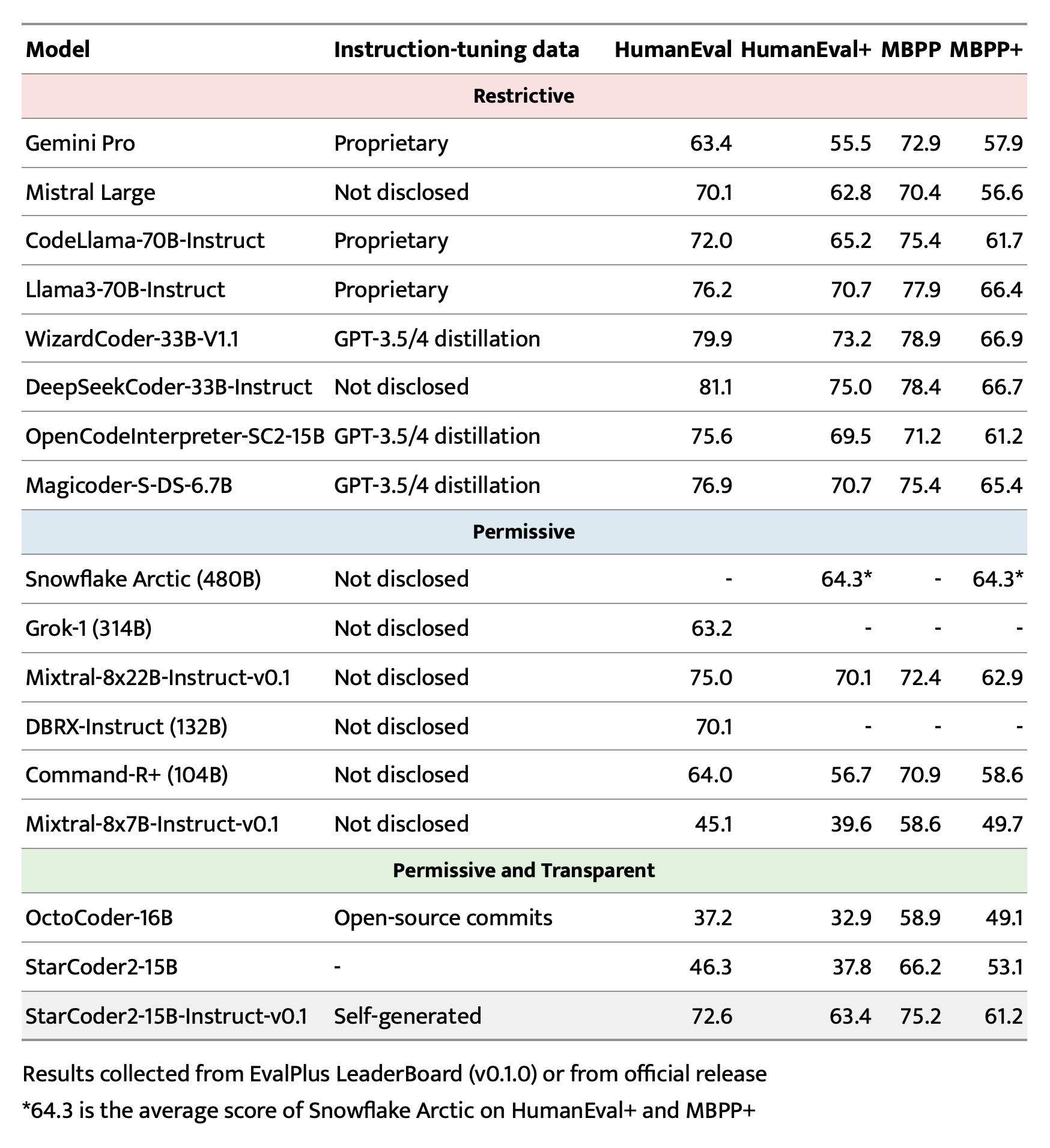

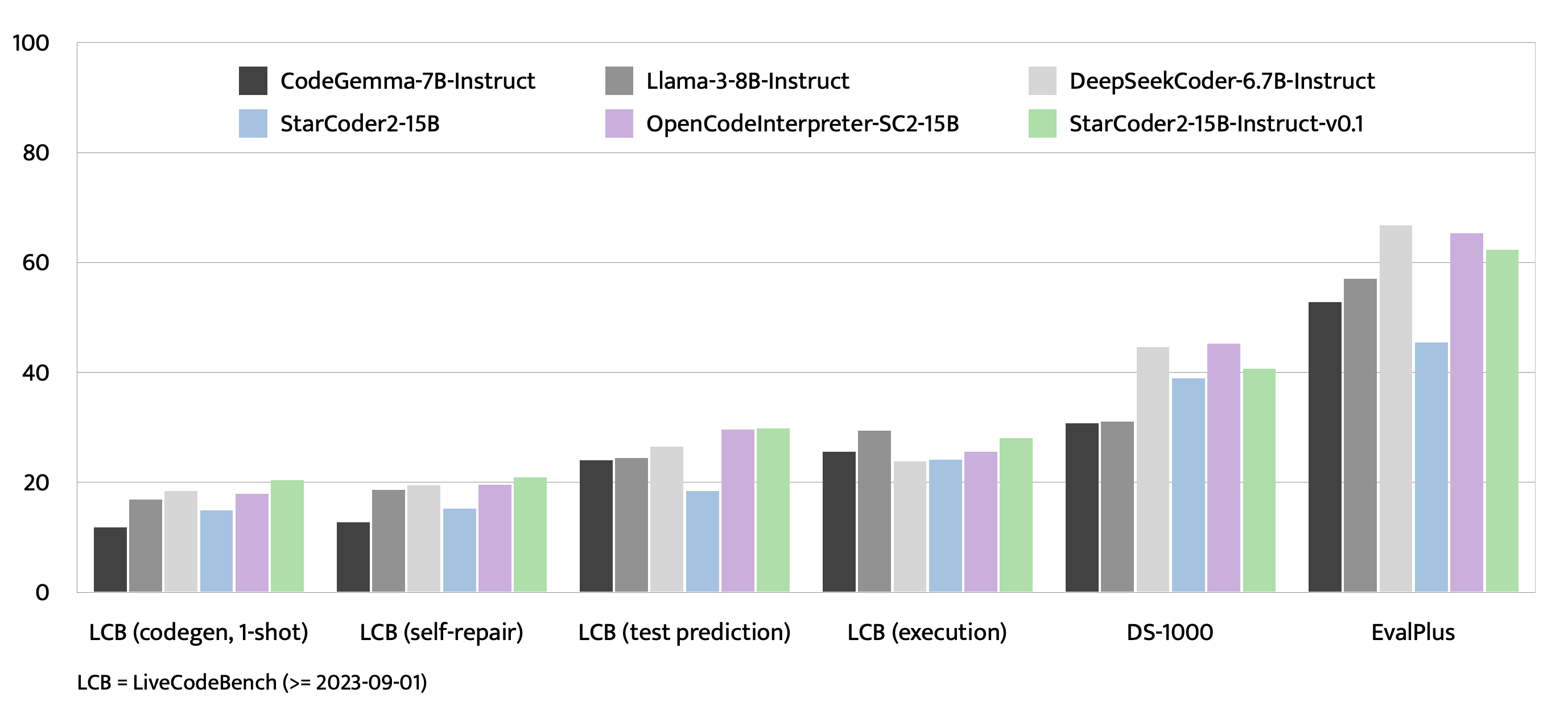

+## Evaluation on EvalPlus, LiveCodeBench, and DS-1000

+

+> Check [evaluation](evaluation/) for more details.

+

+

+

+

+

+## Bias, Risks, and Limitations

+

+StarCoder2-15B-Instruct-v0.1 is primarily finetuned for Python code generation tasks that can be verified through execution, which may lead to certain biases and limitations. For example, the model might not adhere strictly to instructions that dictate the output format. In these situations, it's beneficial to provide a **response prefix** or a **one-shot example** to steer the model’s output. Additionally, the model may have limitations with other programming languages and out-of-domain coding tasks.

+

+The model also inherits the bias, risks, and limitations from its base StarCoder2-15B model. For more information, please refer to the [StarCoder2-15B model card](https://huggingface.co/bigcode/starcoder2-15b).

diff --git a/README.md b/README.md

index aa8d8f0..d107c10 100644

--- a/README.md

+++ b/README.md

@@ -1,275 +1,61 @@

-# StarCoder2-Instruct: Fully Transparent and Permissive Self-Alignment for Code Generation

+# SelfCodeAlign: Self-Alignment for Code Generation

- ⭐️ About

- | 🚀 Quick start

- | 📚 Data generation

- | 🧑💻 Training

- | 📊 Evaluation

- | ⚠️ Limitations

+  +

+  +

+

+

+

+

+

-

-

-

+

+ 🧐 About

+ | ⭐️ StarCoder2-Instruct

+ | 📝 Citation

+

+

+

+

+

+

## About

-We introduce StarCoder2-15B-Instruct-v0.1, the very first entirely self-aligned code Large Language Model (LLM) trained with a fully permissive and transparent pipeline. Our open-source pipeline uses StarCoder2-15B to generate thousands of instruction-response pairs, which are then used to fine-tune StarCoder-15B itself without any human annotations or distilled data from huge and proprietary LLMs.

+**SelfCodeAlign** is the first fully open and transparent pipeline that enhances a code language model without relying on human annotations or distilled data from large, proprietary models. This approach led to the creation of [StarCoder2-Instruct](README-SC2INST.md), a fully transparent, permissively licensed, self-aligned code model that achieves state-of-the-art performance in coding tasks.

-- **Model:** [bigcode/starcoder2-15b-instruct-v0.1](https://huggingface.co/bigcode/starcoder2-instruct-15b-v0.1)

-- **Code:** [bigcode-project/starcoder2-self-align](https://github.com/bigcode-project/starcoder2-self-align)

-- **Dataset:** [bigcode/self-oss-instruct-sc2-exec-filter-50k](https://huggingface.co/datasets/bigcode/self-oss-instruct-sc2-exec-filter-50k/)

-- **Authors:**

+**Authors:**

[Yuxiang Wei](https://yuxiang.cs.illinois.edu),

[Federico Cassano](https://federico.codes/),

[Jiawei Liu](https://jw-liu.xyz),

[Yifeng Ding](https://yifeng-ding.com),

[Naman Jain](https://naman-ntc.github.io),

+[Zachary Mueller](https://muellerzr.github.io),

[Harm de Vries](https://www.harmdevries.com),

[Leandro von Werra](https://twitter.com/lvwerra),

-[Arjun Guha](https://www.khoury.northeastern.edu/home/arjunguha/main/home/),

+[Arjun Guha](https://www.khoury.northeastern.edu/home/arjunguha/main/homehttps://www.khoury.northeastern.edu/home/arjunguha/main/home//),

[Lingming Zhang](https://lingming.cs.illinois.edu).

-

-

-## Quick start

-

-Here is an example to get started with StarCoder2-15B-Instruct-v0.1 using the [transformers](https://huggingface.co/docs/transformers/index) library:

-

-```python

-import transformers

-import torch

-

-pipeline = transformers.pipeline(

- model="bigcode/starcoder2-15b-instruct-v0.1",

- task="text-generation",

- torch_dtype=torch.bfloat16,

- device_map="auto",

-)

-

-def respond(instruction: str, response_prefix: str) -> str:

- messages = [{"role": "user", "content": instruction}]

- prompt = pipeline.tokenizer.apply_chat_template(messages, tokenize=False)

- prompt += response_prefix

-

- teminators = [

- pipeline.tokenizer.eos_token_id,

- pipeline.tokenizer.convert_tokens_to_ids("###"),

- ]

-

- result = pipeline(

- prompt,

- max_length=256,

- num_return_sequences=1,

- do_sample=False,

- eos_token_id=teminators,

- pad_token_id=pipeline.tokenizer.eos_token_id,

- truncation=True,

- )

- response = response_prefix + result[0]["generated_text"][len(prompt) :].split("###")[0].rstrip()

- return response

-

-

-instruction = "Write a quicksort function in Python with type hints and a 'less_than' parameter for custom sorting criteria."

-response_prefix = ""

-

-print(respond(instruction, response_prefix))

-```

-

-## Data generation pipeline

-

-> Run `pip install -e .` first to install the package locally. Check [seed_gathering](seed_gathering/) for details on how we collected the seeds.

-

-We used vLLM's [OpenAI compatible server](https://docs.vllm.ai/en/latest/serving/openai_compatible_server.html) for data generation. So, before running the following commands, make sure the vLLM server is running, and the associated `openai` environment variables are set.

-

-For example, you can start an vLLM server with `docker`:

-

-```shell

-docker run --gpus '"device=0"' \

- -v $HF_HOME:/root/.cache/huggingface \

- -p 10000:8000 \

- --ipc=host \

- vllm/vllm-openai:v0.3.3 \

- --model bigcode/starcoder2-15b \

- --tensor-parallel-size 1 --dtype bfloat16

-```

-

-And then set the environment variables as follows:

-

-```shell

-export OPENAI_API_KEY="EMPTY"

-export OPENAI_BASE_URL="http://localhost:10000/v1/"

-```

-

-

-

-Snippet to concepts generation

-

-```shell

-python src/star_align/self_ossinstruct.py \

- --instruct_mode "S->C" \

- --seed_data_files /path/to/seeds.jsonl \

- --max_new_data 50000 \

- --tag concept_gen \

- --temperature 0.7 \

- --seed_code_start_index 0 \

- --model bigcode/starcoder2-15b \

- --num_fewshots 8 \

- --num_batched_requests 32 \

- --num_sample_per_request 1

-```

-

-

-

-

-

-Concepts to instruction generation

-

-```shell

-python src/star_align/self_ossinstruct.py \

- --instruct_mode "C->I" \

- --seed_data_files /path/to/concepts.jsonl \

- --max_new_data 50000 \

- --tag instruction_gen \

- --temperature 0.7 \

- --seed_code_start_index 0 \

- --model bigcode/starcoder2-15b \

- --num_fewshots 8 \

- --num_sample_per_request 1 \

- --num_batched_request 32

-```

-

-

-

-

-

-Instruction to response (with self-validation code) generation

-

-```shell

-python src/star_align/self_ossinstruct.py \

- --instruct_mode "I->R" \

- --seed_data_files path/to/instructions.jsonl \

- --max_new_data 50000 \

- --tag response_gen \

- --seed_code_start_index 0 \

- --model bigcode/starcoder2-15b \

- --num_fewshots 1 \

- --num_batched_request 8 \

- --num_sample_per_request 10 \

- --temperature 0.7

-```

-

-

-

-

-

-Execution filter

-

-> **Warning:** Though we implemented reliability guards, it is highly recommended to run execution in a sandbox environment. The command below doesn't provide sandboxing by default.

-

-```shell

-python src/star_align/execution_filter.py --response_path /path/to/response.jsonl --result_path /path/to/filtered.jsonl

-# The current implementation may cause deadlock.

-# If you encounter deadlock, manually do `ps -ef | grep execution_filter` and kill the stuck process.

-# Note that filtered.jsonl may contain multiple passing samples for the same instruction which needs further selection.

-```

-

-For using the the Docker container for executing code, you will first need to `git submodule update --init --recursive` to clone the server, then run:

-

-```shell

-pushd ./src/star_align/code_exec_server

-./build_and_run.sh

-popd

-python src/star_align/execution_filter.py --response_path /path/to/response.jsonl --result_path /path/to/filtered.jsonl --container_server http://127.0.0.1:8000

-```

-

-

-

-

-

-Data sanitization and selection

-

-```shell

-RAW=1 python src/star_align/sanitize_data.py /path/to/filtered.jsonl /path/to/sanitized.jsonl

-python src/star_align/clean_data.py --data_files /path/to/sanitized.jsonl --output_file /path/to/sanitized.jsonl --diversify_func_names

-SMART=1 python src/star_align/sanitize_data.py /path/to/sanitized.jsonl /path/to/sanitized.jsonl

-```

-

-

-

-## Training Details

-

-> Run `pip install -e .` first to install the package locally. And install [Flash Attention](https://github.com/Dao-AILab/flash-attention) to speed up the training.

-

-### Hyperparameters

+

-- **Optimizer:** Adafactor

-- **Learning rate:** 1e-5

-- **Epoch:** 4

-- **Batch size:** 64

-- **Warmup ratio:** 0.05

-- **Scheduler:** Linear

-- **Sequence length:** 1280

-- **Dropout**: Not applied

+## StarCoder2-Instruct

-### Hardware

-

-1 x NVIDIA A100 80GB. Yes, you just need one A100 to finetune StarCoder2-15B!

+

-### Script

+StarCoder2-Instruct is created with an [earlier version](https://github.com/bigcode-project/selfcodealign/tree/starcoder2-instruct) of SelfCodeAlign. It is the very first entirely self-aligned code Large Language Model (LLM) trained with a fully permissive and transparent pipeline. Our open-source pipeline uses StarCoder2-15B to generate thousands of instruction-response pairs, which are then used to fine-tune StarCoder-15B itself without any human annotations or distilled data from huge and proprietary LLMs.

-The following script finetunes StarCoder2-15B-Instruct-v0.1 from the base StarCoder2-15B model. `/path/to/dataset.jsonl` is the JSONL format of the [50k dataset](https://huggingface.co/datasets/bigcode/self-oss-instruct-sc2-exec-filter-50k) we generated. You can dump the dataset to JSONL to fit the training script.

+- **Model:** [bigcode/starcoder2-15b-instruct-v0.1](https://huggingface.co/bigcode/starcoder2-instruct-15b-v0.1)

+- **Code:** [bigcode-project/starcoder2-self-align](https://github.com/bigcode-project/starcoder2-self-align)

+- **Dataset:** [bigcode/self-oss-instruct-sc2-exec-filter-50k](https://huggingface.co/datasets/bigcode/self-oss-instruct-sc2-exec-filter-50k/)

-

+For more details, check [README-SC2INST.md](README-SC2INST.md).

-Click to see the training script

+## Citation

-```shell

-MODEL_KEY=bigcode/starcoder2-15b

-LR=1e-5

-EPOCH=4

-SEQ_LEN=1280

-WARMUP_RATIO=0.05

-OUTPUT_DIR=/path/to/output_model

-DATASET_FILE=/path/to/50k-dataset.jsonl

-accelerate launch -m star_align.train \

- --model_key $MODEL_KEY \

- --model_name_or_path $MODEL_KEY \

- --use_flash_attention True \

- --datafile_paths $DATASET_FILE \

- --output_dir $OUTPUT_DIR \

- --bf16 True \

- --num_train_epochs $EPOCH \

- --max_training_seq_length $SEQ_LEN \

- --pad_to_max_length False \

- --per_device_train_batch_size 1 \

- --gradient_accumulation_steps 64 \

- --group_by_length False \

- --ddp_find_unused_parameters False \

- --logging_steps 1 \

- --log_level info \

- --optim adafactor \

- --max_grad_norm -1 \

- --warmup_ratio $WARMUP_RATIO \

- --learning_rate $LR \

- --lr_scheduler_type linear

+```bibtex

+@article{wei2024selfcodealign,

+ title={SelfCodeAlign: Self-Alignment for Code Generation},

+ author={Yuxiang Wei and Federico Cassano and Jiawei Liu and Yifeng Ding and Naman Jain and Zachary Mueller and Harm de Vries and Leandro von Werra and Arjun Guha and Lingming Zhang},

+ year={2024},

+ journal={arXiv preprint arXiv:2410.24198}

+}

```

-

-

-

-## Evaluation on EvalPlus, LiveCodeBench, and DS-1000

-

-> Check [evaluation](evaluation/) for more details.

-

-

-

-

-

-## Bias, Risks, and Limitations

-

-StarCoder2-15B-Instruct-v0.1 is primarily finetuned for Python code generation tasks that can be verified through execution, which may lead to certain biases and limitations. For example, the model might not adhere strictly to instructions that dictate the output format. In these situations, it's beneficial to provide a **response prefix** or a **one-shot example** to steer the model’s output. Additionally, the model may have limitations with other programming languages and out-of-domain coding tasks.

-

-The model also inherits the bias, risks, and limitations from its base StarCoder2-15B model. For more information, please refer to the [StarCoder2-15B model card](https://huggingface.co/bigcode/starcoder2-15b).

diff --git a/evaluation/README.md b/evaluation/README.md

index 2ce2ef4..841342f 100644

--- a/evaluation/README.md

+++ b/evaluation/README.md

@@ -1,9 +1,29 @@

-# Reproduce the experiments

+# Evaluation

> [!IMPORTANT]

> **General requirements**

>

> Before you start, make sure you have cloned the repository and you are in the **root directory of the project**. Make sure you installed the required packages with `pip install -e .`. Different package versions may impact the reproducibility of the results.

+

+## Running EvalPlus with vLLM

+

+We implemented batched inference in [evaluation/text2code_vllm.py] using [vLLM](https://docs.vllm.ai/en/latest/). This speed up the evaluation significantly: **a greedy decoding run can be finished within 20 seconds**. Here is the command:

+

+```bash

+MODEL=/path/to/your/model

+DATASET=humaneval # or mbpp

+SAVE_PATH=evalplus-$(basename $MODEL)-$DATASET.jsonl

+CUDA_VISIBLE_DEVICES=0 python -m evaluation.text2code_vllm \

+ --model_key $MODEL \

+ --dataset $DATASET \

+ --save_path $SAVE_PATH

+

+python -m evalplus.evaluate --dataset $DATASET --samples $SAVE_PATH

+```

+

+## Reproduce StarCoder2-Instruct

+

+> [!NOTE]

>

> We obtained the results with the subsequent hardware and environment:

>

@@ -12,13 +32,13 @@

>

> In case you face issues, we provide the raw outputs we generated in the [evalplus_results](evalplus_results) directory.

-## Reproduce HumanEval(+) and MBPP(+)

+### Reproduce HumanEval(+) and MBPP(+)

We pack multiple problems into one batch to speed up the inference. A different batch size may lead to slightly worse/better results due to the floating point round off resulted from the underlying [cuBLAS](https://docs.nvidia.com/cuda/cublas/index.html) optimization.

Make sure you set `CUDA_VISIBLE_DEVICES` to the GPU you want to use and `cd`ed to the root directory of the repo. We assume you use device 0 in the following commands.

-### HumanEval(+)

+#### HumanEval(+)

```bash

MODEL_KEY=bigcode/starcoder2-15b-instruct-v0.1

@@ -46,7 +66,7 @@ python -m evalplus.evaluate --dataset $DATASET --samples $SAVE_PATH

# pass@1: 0.634

```

-### MBPP(+)

+#### MBPP(+)

```bash

MODEL_KEY=bigcode/starcoder2-15b-instruct-v0.1

@@ -71,4 +91,4 @@ python -m evalplus.evaluate --dataset $DATASET --samples $SAVE_PATH

# pass@1: 0.642

# mbpp+ (base + extra tests)

# pass@1: 0.526

-```

\ No newline at end of file

+```

diff --git a/evaluation/ds_1000.py b/evaluation/ds_1000.py

new file mode 100644

index 0000000..9225313

--- /dev/null

+++ b/evaluation/ds_1000.py

@@ -0,0 +1,264 @@

+import os

+from dataclasses import dataclass, field

+from pathlib import Path

+from typing import Callable, Literal, cast

+from transformers import AutoTokenizer

+from ds1000 import DS1000Dataset, DS1000Problem

+from tqdm.auto import tqdm

+from transformers import HfArgumentParser

+

+from star_align.llm_wrapper import (

+ GenerationConfig,

+ ModelContext,

+ create_infilling_prompt,

+ get_model_context,

+)

+from star_align.utils import infer_prompt_template

+

+from vllm import LLM, SamplingParams

+

+PROMPT = cast(str, None)

+

+

+@dataclass

+class Args:

+ dataset_path: str

+ model_key: str

+ model_name_or_path: str

+ mode: Literal["Insertion", "Completion"]

+ output_dir: str

+

+ temperature: float = field(default=0.2)

+ top_p: float = field(default=0.95)

+ max_length: int = field(default=1024)

+ n_samples_per_batch: int = field(default=5)

+ n_batches: int = field(default=8)

+

+ def to_generation_config(self) -> GenerationConfig:

+ return GenerationConfig(

+ # Use max_length to control

+ max_new_tokens=9999999999999,

+ top_p=self.top_p,

+ temperature=self.temperature,

+ max_length=self.max_length,

+ )

+

+

+def postprocess(text: str) -> str:

+ return text.split("```")[0]

+

+

+def create_prompt(args: Args, tokenizer: AutoTokenizer, problem: DS1000Problem) -> str:

+ prompt = problem["prompt"]

+ if args.mode == "Insertion":

+ prompt = preprocess_insertion_prompt(prompt)

+ assert prompt.count("[insert]") == 1

+ prefix, suffix = prompt.split("[insert]")

+ prompt = create_infilling_prompt(

+ model_key=args.model_key,

+ prefix=prefix,

+ suffix=suffix,

+ tokenizer=tokenizer,

+ )

+ else:

+ assert args.mode == "Completion"

+ instruction, response_prefix = preprocess_completion_prompt(problem["prompt"])

+ prompt = PROMPT.format(

+ instruction=instruction,

+ response=response_prefix,

+ )

+ return prompt

+

+

+def generate(

+ args: Args,

+ # model_context: ModelContext,

+ engine: LLM,

+ problem: DS1000Problem,

+):

+ lib: str = problem["lib"]

+ model_key = args.model_key.replace("/", "-")

+ problem_id: str = f"q{problem.problem_id}"

+ path = Path(args.output_dir) / model_key / lib / args.mode / problem_id

+ finishing_signal = path / "FINISHED"

+ if finishing_signal.exists():

+ print("Skipping:", path)

+ return

+ if not path.exists():

+ print("Making directory:", path)

+ path.mkdir(parents=True, exist_ok=True)

+ # config = args.to_generation_config()

+ prompt = create_prompt(args, engine.get_tokenizer(), problem)

+ print("========PROMPT=======")

+ print(prompt)

+ print("========PROMPT=======")

+

+ sampling_params = SamplingParams(

+ n=args.n_batches * args.n_samples_per_batch,

+ temperature=args.temperature,

+ max_tokens=args.max_length,

+ top_k=-1,

+ top_p=args.top_p,

+ stop=["```"],

+ )

+

+ # for batch_idx in range(args.n_batches):

+ # print(f"Generating batch {batch_idx} of {args.n_batches}")

+ # response = model_context.complete(

+ # config=config,

+ # prompts=[prompt] * args.n_samples_per_batch,

+ # stop_tokens=["```"] if os.getenv("STOP") is not None else None,

+ # )

+ print(f"Generating {args.n_batches * args.n_samples_per_batch} samples")

+ results = engine.generate(prompt, sampling_params)

+ assert len(results) == 1

+ print("=======RESPOSE[-1]=======")

+ # postprocess_fn: Callable[[str], str] = (

+ # (lambda x: x) if args.mode == "Insertion" else postprocess

+ # )

+ postprocess_fn = postprocess

+ print(postprocess_fn(results[0].outputs[-1].text))

+ # print("=======RESPOSE[-1]=======")

+ # print("=======RESPOSE[RAW]=======")

+ # print(response.decoded_outputs[-1])

+ # print("=======RESPOSE[RAW]=======")

+ # exit()

+ assert len(results[0].outputs) == args.n_batches * args.n_samples_per_batch

+ for idx, output in enumerate(results[0].outputs):

+ sample = output.text

+ sample = postprocess_fn(sample)

+ # global_index = batch_idx * args.n_samples_per_batch + idx

+ global_index = idx

+ output_file = path / f"{global_index}.py"

+ output_file.write_text(sample)

+ finishing_signal.touch()

+

+

+def preprocess_completion_prompt(prompt: str) -> tuple[str, str]:

+ """Preprocess the DS-1000 prompt (Completion mode) into instruction and response prefix"""

+ # hit = False

+ if not "SOLUTION START" in prompt:

+ answer_index = prompt.rindex("A:")

+ answer = prompt[answer_index + 2 :].strip()

+ instruction: str = prompt[:answer_index].strip()

+ if instruction.startswith("Problem:"):

+ instruction = instruction[len("Problem:") :].strip()

+ if "### BEGIN SOLUTION" in prompt:

+ assert prompt.count("") == 1

+ assert prompt.count("") == 0

+ lines = answer.splitlines(keepends=True)

+ return_line, result_line, begin_line = lines[-3:]

+ assert return_line.strip().startswith("# return")

+ assert result_line.strip().startswith("# ")

+ assert begin_line.strip() == "### BEGIN SOLUTION"

+ response = "".join(lines[:-3]).strip()

+ hint = begin_line.replace("###", "#").replace("BEGIN SOLUTION", "Solution")

+ response += f"\n{hint}\n"

+ else:

+ assert "BEGIN SOLUTION" in prompt

+ assert prompt.count("") == 2

+ assert prompt.count("") == 1

+ first_block_start = prompt.index("")

+ first_block_end = prompt.index("")

+ second_block_start = prompt.index("", first_block_start + 1)

+ assert first_block_end < second_block_start

+ lines = answer.splitlines(keepends=True)

+ block_end, instruction_line, begin_line, block_start = lines[-4:]

+ assert begin_line.strip() == "BEGIN SOLUTION"

+ assert block_start.strip() == ""

+ if not block_end.strip() == "":

+ if lines[-6].strip() == "":

+ response_prefix = lines[:-6]

+ starting_lines = lines[-5:-2]

+ else:

+ assert instruction_line.strip() == ""

+ response_prefix = lines[:-3]

+ starting_lines = lines[-2:-2]

+ else:

+ response_prefix = lines[:-4]

+ starting_lines = lines[-3:-2]

+ starting_lines = [f"# {line.lstrip()}" for line in starting_lines]

+ response = "".join([*response_prefix, *starting_lines]).strip()

+ response += "\n# Solution\n"

+ else:

+ # hit = True

+ assert prompt.count("") == 0

+ assert prompt.count("") == 0

+ assert prompt.strip().endswith("# SOLUTION START")

+ code_prefix = prompt[: prompt.rindex("# SOLUTION START")].strip()

+ instruction = f"""Write a solution to the following problem:

+```python

+{code_prefix}

+```"""

+ response = f"```python\n{code_prefix}\n# Solution\n"

+ instruction = instruction.replace("", "```python").replace("", "```")

+ response = response.replace("", "```python").replace("", "```")

+ # if hit:

+ # print("[Instruction]")

+ # print(instruction)

+ # print("[Response]")

+ # print(response)

+ # breakpoint()

+ return instruction, response

+

+

+def preprocess_insertion_prompt(prompt: str) -> str:

+ pattern = """

+BEGIN SOLUTION

+

+[insert]

+

+END SOLUTION"""

+ pattern_index = prompt.index(pattern)

+ # pattern_block = prompt[pattern_index:]

+ prefix = prompt[:pattern_index]

+ # hit = False

+ if pattern + "\n" in prompt:

+ index = prompt.index("", pattern_index + len(pattern))

+ suffix = prompt[index + len("") :]

+ else:

+ # hit = True

+ assert pattern in prompt

+ suffix = ""

+ final_prompt = prefix.strip() + "\n[insert]\n" + suffix.strip()

+ final_prompt = final_prompt.replace("", "```python").replace("", "```")

+ # if hit:

+ # print(final_prompt)

+ # breakpoint()

+ return final_prompt

+

+

+def main():

+ args = cast(Args, HfArgumentParser(Args).parse_args_into_dataclasses()[0])

+ dataset = DS1000Dataset(args.dataset_path, mode=args.mode)

+

+ global PROMPT

+ if (inferred := os.getenv("INFER")) is not None:

+ if inferred == "1":

+ PROMPT = infer_prompt_template(args.model_name_or_path)

+ else:

+ PROMPT = infer_prompt_template(inferred)

+

+ print("Using prompt:")

+ print(PROMPT)

+

+ all_problems = [

+ problem

+ for problems in dataset.data.values()

+ for problem in problems

+ if args.mode == "Completion" or problem["lib"] != "Matplotlib"

+ ]

+ engine = LLM(

+ tokenizer=args.model_key, model=args.model_name_or_path or args.model_key

+ )

+ # model_context = get_model_context(

+ # model_key=args.model_key,

+ # model_name_or_path=args.model_name_or_path,

+ # )

+ for problem in tqdm(all_problems):

+ # generate(args, model_context, problem)

+ generate(args, engine, problem)

+

+

+if __name__ == "__main__":

+ main()

diff --git a/evaluation/text2code.py b/evaluation/text2code.py

index 58dd789..4f98159 100644

--- a/evaluation/text2code.py

+++ b/evaluation/text2code.py

@@ -1,3 +1,15 @@

+import warnings

+

+if __name__ == "__main__":

+ # Deprecate warning

+ warnings.warn(

+ "This module is deprecated. Use `evaluation.text2code_vllm` instead.",

+ DeprecationWarning,

+ )

+ # Press y to continue

+ if input("Do you want to continue? [y/N]: ").lower() != "y":

+ exit()

+

import itertools

from dataclasses import dataclass

from pathlib import Path

@@ -10,6 +22,7 @@

from star_align.prompt_template import SC2_INSTRUCT_PROMPT as PROMPT_TEMPLATE

from star_align.utils import chunked

+

class Text2CodeProblem(TypedDict):

id: str

instruction: str

@@ -25,6 +38,7 @@ def get_humaneval_raw_problems() -> list[dict]:

problems = get_human_eval_plus()

return list(problems.values())

+

def map_mbpp_problem(p: dict) -> Text2CodeProblem:

id = p["task_id"]

prompt = p["prompt"]

diff --git a/evaluation/text2code_vllm.py b/evaluation/text2code_vllm.py

new file mode 100644

index 0000000..cb9375e

--- /dev/null

+++ b/evaluation/text2code_vllm.py

@@ -0,0 +1,215 @@

+import os

+from dataclasses import dataclass, field

+from pathlib import Path

+from typing import Literal, TypedDict, cast

+from evalplus.data import get_human_eval_plus, get_mbpp_plus, write_jsonl

+

+from evoeval.data import get_evo_eval

+from transformers import HfArgumentParser

+

+from star_align.utils import infer_prompt_template, is_base_model

+

+from vllm import LLM, SamplingParams

+

+

+class Text2CodeProblem(TypedDict):

+ id: str

+ prompt: str

+ instruction: str

+ response_prefix: str

+

+

+# MBPP_INSTRUCTION = """{nl_description} Your code should satisfy the following assertion:

+# ```python

+# {assertions}

+# ```

+# Enclose your solution in ```python and ```"""

+

+

+def get_mbpp_raw_problems() -> list[dict]:

+ problems = get_mbpp_plus()

+ return list(problems.values())

+

+

+def get_humaneval_raw_problems() -> list[dict]:

+ problems = get_human_eval_plus()

+ return list(problems.values())

+

+

+def get_evoeval_raw_problems(dataset: str):

+ def get_raw_problems() -> list[dict]:

+ problems = get_evo_eval(dataset)

+ return list(problems.values())

+

+ return get_raw_problems

+

+

+def map_mbpp_problem(p: dict) -> Text2CodeProblem:

+ id = p["task_id"]

+ prompt = p["prompt"]

+ start_index = prompt.index('"""')

+ end_index = prompt.rindex('"""')

+ prompt = prompt[start_index + 3 : end_index]

+ assert_index = prompt.index("assert")

+ instruction = prompt[:assert_index].strip()

+ if not instruction.endswith("."):

+ instruction += "."

+ assertion = prompt[assert_index:].strip()

+ instruction = f"""{instruction}

+

+```python

+{assertion}

+```"""

+ prefix = ""

+ response_prefix = f"""{prefix}```python"""

+ return Text2CodeProblem(

+ id=str(id),

+ prompt=prompt,

+ instruction=instruction,

+ response_prefix=response_prefix,

+ )

+

+

+def map_humaneval_problem(p: dict) -> Text2CodeProblem:

+ id = p["task_id"]

+ prompt = p["prompt"]

+ prompt = prompt.strip()

+ # try:

+ # docstring_index = prompt.index('"""')

+ # except ValueError:

+ # docstring_index = prompt.index("'''")

+ # signature = prompt[:docstring_index].strip()

+ # Instruction

+ # instruction = f"""Complete the implementation of the following function:

+ prompt_header = os.getenv(

+ "PROMPT_HEADER", "Write a Python function to solve the following task:"

+ )

+ instruction = f"""{prompt_header}

+```python

+{prompt}

+```"""

+ prefix = ""

+ prefix_template = os.getenv("PREFIX_TEMPLATE", "```python")

+ response_prefix = prefix + (

+ prefix_template.replace("{prompt}", prompt)

+ if "{prompt}" in prefix_template

+ else prefix_template

+ )

+ # response_prefix = f"""{prefix}```python

+ # {prompt}"""

+ return Text2CodeProblem(

+ id=id,

+ prompt=prompt,

+ instruction=instruction,

+ response_prefix=response_prefix,

+ )

+

+

+@dataclass(frozen=True)

+class Args:

+ model_key: str

+ dataset: Literal[

+ "humaneval",

+ "mbpp",

+ "EvoEval_difficult",

+ "EvoEval_creative",

+ "EvoEval_subtle",

+ "EvoEval_combine",

+ "EvoEval_tool_use",

+ "EvoEval_verbose",

+ "EvoEval_concise",

+ ]

+ save_path: str

+ n_samples_per_problem: int = field(default=1)

+ max_new_tokens: int = field(default=1024)

+ top_p: float = field(default=1.0)

+ temperature: float = field(default=0.0)

+ model_name_or_path: str | None = None

+

+

+def main():

+ args = cast(Args, HfArgumentParser(Args).parse_args_into_dataclasses()[0])

+ raw_problem_fn, map_problem_fn = (

+ (get_evoeval_raw_problems(args.dataset), map_humaneval_problem)

+ if args.dataset.startswith("EvoEval_")

+ else (

+ (get_humaneval_raw_problems, map_humaneval_problem)

+ if args.dataset == "humaneval"

+ else (get_mbpp_raw_problems, map_mbpp_problem)

+ )

+ )

+ raw_problems = raw_problem_fn()

+ problems = list(map(map_problem_fn, raw_problems))

+

+ engine = LLM(

+ tokenizer=args.model_key, model=args.model_name_or_path or args.model_key

+ )

+

+ base_model_prompt = is_base_model(args.model_key)

+

+ stop: str | list[str] = (

+ "\n```\n"

+ if not base_model_prompt

+ else ["\ndef ", "\nclass ", "\nimport ", "\nfrom ", "\nassert ", "\n# "]

+ )

+ sampling_params = SamplingParams(

+ n=args.n_samples_per_problem,

+ temperature=args.temperature,

+ max_tokens=args.max_new_tokens,

+ top_k=-1,

+ top_p=args.top_p,

+ stop=stop,

+ )

+

+ if base_model_prompt:

+ print("Base model")

+ else:

+ prompt_template = infer_prompt_template(

+ os.getenv("TOKENIZER") or args.model_name_or_path or args.model_key

+ )

+ # prompt_template = PROMPT_TEMPLATE

+ print("Using:", prompt_template)

+

+ prompts: list[str] = []

+ for problem in problems:

+ if not base_model_prompt:

+ prompt = prompt_template.format(

+ instruction=problem["instruction"], response=problem["response_prefix"]

+ )

+ else:

+ prompt = problem["prompt"]

+ prompts.append(prompt)

+

+ results = engine.generate(prompts, sampling_params)

+ Path(args.save_path).write_text("")

+

+ step = 20

+ print_or_not = [idx == 0 or idx % step == 0 for idx in range(len(problems))]

+

+ def sanitize(output: str) -> str:

+ if not base_model_prompt:

+ return output.split("```python")[-1].split("```")[0]

+ for s in stop:

+ output = output.rsplit(s, 1)[0]

+ return output

+

+ for problem, prompt, result, print_debug in zip(

+ problems, prompts, results, print_or_not

+ ):

+ if print_debug:

+ print("[Example Prompt]")

+ print(prompt)

+ print("[Example Completion]")

+ print(result.outputs[0].text)

+ samples = [

+ dict(

+ task_id=problem["id"],

+ completion=sanitize(output.text),

+ )

+ for output in result.outputs

+ ]

+ write_jsonl(args.save_path, samples, append=True)

+

+

+if __name__ == "__main__":

+ main()

diff --git a/prompts/self-ossinstruct-fewshot.txt b/prompts/self-ossinstruct-fewshot.txt

index a709231..8c73095 100644

--- a/prompts/self-ossinstruct-fewshot.txt

+++ b/prompts/self-ossinstruct-fewshot.txt

@@ -1,15 +1,21 @@

### System: I->R

-Provide the best response to a given instruction. Follow the following steps to craft your response:

-1. reason about the given instruction

-2. provide a high-quality solution

-3. offer a concise explanation

-4. write assertions and **invoke them** to verify the correctness your solution

+You are an extremely intelligent AI coding assistant. Please provide an accurate and reliable response to each user instruction. After delivering your response, verify its consistency and correctness by writing a series of executable tests.

### System: C->I

-Create a set of independent code instructions that are original, different, diverse, and high-quality, where the properties control an instruction's category, language, concepts, and difficulty.

+Create a series of independent coding tasks that are original, distinct, diverse, and high-quality, fostering logical thinking. Each task must adhere to specified properties:

+

+- category: the type of task (e.g., function implementation, class implementation, or program implementation)

+- language: the programming language to be used

+- difficulty: the complexity level of the task (e.g., easy, medium, or hard)

+- concepts: fundamental principles and techniques the task is designed to incorporate, which developers must understand to effectively solve the task

+

+Design the tasks so that the relevant concepts emerge naturally as the most appropriate solutions, without explicitly mentioning that a particular concept should be used.

### System: S->C

-Extract key programming concepts from a given code snippet collected from the open source repositories. Present the concepts as a comma separated list.

+Extract key programming concepts from the provided code snippet. Programming concepts refer to the foundational principles and techniques used in programming, which are crucial for developers to master. List these concepts in a comma-separated format.

+

+### System: S->I

+Gain inspiration from the given code snippets and create a series of independent coding tasks that are original, distinct, diverse, and high-quality, fostering logical thinking.

### Example 1

[Code]

@@ -25,38 +31,15 @@ def _split_into_chunks(value):

value >>= 5

[Property]

-category: code generation (function implementation)

+category: function implementation

language: Python

-concepts: 5-bit chunk encoding with bitwise shifts, ASCII value manipulation, continuation bit signaling

difficulty: medium

+concepts: 5-bit chunk encoding with bitwise shifts, ASCII value manipulation, continuation bit signaling

[Instruction]

Create a Python function to encode an integer into a sequence of encoded characters, where each character represents a 5-bit chunk of the integer. The ASCII value of each chunk character is formed by adding 63 to the 5-bit chunk's value. For all chunks except the last one, set the 6th bit to 1 to indicate continuation. Employ bitwise operations for this encoding process.

-[Solution]

-```python

-def encode_integer_to_chars(value: int) -> str:

- if value == 0:

- return chr(63)

- encoded_chars = []

- while value > 0:

- chunk = (value & 31) + 63

- value >>= 5

- if value > 0:

- chunk |= 32

- encoded_chars.append(chr(chunk))

- return ''.join(reversed(encoded_chars))

-

-# Tests

-assert encode_integer_to_chars(0) == chr(63)

-assert encode_integer_to_chars(1) == chr(63 + 1)

-assert encode_integer_to_chars(31) == chr(63 + 31)

-assert encode_integer_to_chars(int(0b1)) == chr(0b1 + 63)

-assert encode_integer_to_chars(int(0b1000001)) == chr(0b10 + 63) + chr(63 + 0b100001)

-```

-

[Response]

-[Reasoning]

To encode an integer into a sequence of encoded characters based on the specified criteria, let's follow these steps:

1. Break down the integer into 5-bit chunks: Extract 5-bit chunks from the integer using using `& 31` (`0x1F`), because the binary representation of `31` (`11111`) has the last five bits set, which will effectively extract the 5 bits we are interested in. We start from the least significant bits (LSB).

@@ -65,37 +48,28 @@ To encode an integer into a sequence of encoded characters based on the specifie

4. Convert to characters: Use `ord` and `chr` to convert each chunk (now effectively 6 bits due to the continuation bit) into a character by adding 63 to its value. This ensures that the character is within a printable ASCII range.

5. Assembly: Assemble these characters into the final encoded string while maintaining the order of the chunks through `reversed`.

-[Implementation]

Here's how you can implement this function:

```python

def encode_integer_to_chars(value: int) -> str:

- # Base case for 0

if value == 0:

return chr(63)

encoded_chars = []

while value > 0:

- # Extract the 5-bit chunk and add 63 to its value

chunk = (value & 31) + 63

value >>= 5

- # If there's still more of the value left, set the continuation bit

if value > 0:

chunk |= 32

encoded_chars.append(chr(chunk))

- # Reverse the list of characters to start with the least significant chunk

- # and join them into a single string

return ''.join(reversed(encoded_chars))

```

-[Explanation]

-This function processes the integer bit by bit in 5-bit chunks, applying bitwise operations to extract and manipulate these chunks, then converts each chunk into a character using the specified encoding scheme. This approach efficiently handles the encoding in a loop until all bits of the integer have been processed.

-

-Note that this function is designed for non-negative integers and does not support negative integers by default because negative numbers are represented in two's complement form, where the most significant bit (MSB) indicates the sign. In Python, right-shifting (`>>`) a negative integer preserves the sign bit, effectively making the never executed due to the `value > 0` condition. If you change it to `value != 0`, it will then lead to an infinite loop if the same encoding logic is applied to negative integers without modifications.

+Keep in mind that this function is designed for non-negative integers. In Python, right-shifting (`>>`) a negative integer preserves the sign bit, which could lead to infinite loops or other incorrect behaviors if the same encoding approach is applied to negative integers without appropriate adjustments.

[Tests]

-You can validate this function with a few test cases to ensure correctness:

+You can validate this function with the following test cases that cover scenarios from zero to a number requiring more than one chunk, ensuring the function operates as expected:

```python

assert encode_integer_to_chars(0) == chr(63)

@@ -105,8 +79,6 @@ assert encode_integer_to_chars(int(0b1)) == chr(0b1 + 63)

assert encode_integer_to_chars(int(0b1000001)) == chr(0b10 + 63) + chr(63 + 0b100001)

```

-These tests cover scenarios from zero to a number requiring more than one chunk, ensuring the function operates as expected.

-

### Example 2

[Code]

def ceil_shift(n, b):

@@ -123,37 +95,15 @@ def ceil_shift(n, b):

else:

[Property]

-category: code generation (function implementation)

+category: function implementation

language: Python

-concepts: bitwise operation for division, ceiling without division or float, input validation with assertions, non-negative integer checks

difficulty: medium

+concepts: bitwise operation for division, ceiling without division or float, input validation with assertions, non-negative integer checks

[Instruction]

Develop a Python function `ceil_divide_without_div` that computes the ceiling of `n / 2**b` without using division, floating-point operations, built-in functions like `math.ceil`, or converting it into computing the floor. Instead, use bit manipulation to achieve the result. You write assertions to ensure both `n` and `b` are non-negative integers.

-[Solution]

-```python

-def ceil_divide_without_div(n: int, b: int) -> int:

- assert isinstance(n, int) and n >= 0, "n must be a non-negative integer"

- assert isinstance(b, int) and b >= 0, "b must be a non-negative integer"

- mask = (1 << b) - 1

- divisible = (n & mask) == 0

- result = n >> b

- if not divisible:

- result += 1

- return result

-

-# Tests

-import math

-assert ceil_divide_without_div(10, 3) == math.ceil(10 / 2**3)

-assert ceil_divide_without_div(17, 4) == math.ceil(17 / 2**4)

-assert ceil_divide_without_div(100, 5) == math.ceil(100 / 2**5)

-assert ceil_divide_without_div(0, 3) == math.ceil(0 / 2**3)

-assert ceil_divide_without_div(1, 0) == math.ceil(1 / 2**0)

-```

-

[Response]

-[Reasoning]

We can leverage the properties of bitwise operations to develop a Python function that computes the ceiling of `n / 2**b` without using division, floating-point operations, or any built-in functions like `math.ceil`, and strictly avoiding converting the problem into a floor computation.

In the context, dividing by `2**b` is equivalent to right-shifting `n` by `b` bits in binary representation when `n` is divisible by `2**b`. Otherwise, this approach inherently computes the floor of the division. To adjust for the ceiling without directly calculating it, we can consider two cases:

@@ -165,7 +115,6 @@ To determine the divisibility of `n` by `2**b`, we can check if the first `b` bi

We'll also include assertions to ensure that `n` and `b` are non-negative integers.

-[Implementation]

Here is the implementation of `ceil_divide_without_div`:

```python

@@ -173,24 +122,18 @@ def ceil_divide_without_div(n: int, b: int) -> int:

assert isinstance(n, int) and n >= 0, "n must be a non-negative integer"

assert isinstance(b, int) and b >= 0, "b must be a non-negative integer"

- # Constructing the mask to check divisibility

mask = (1 << b) - 1

divisible = (n & mask) == 0

-

result = n >> b

-

- # If n is not divisible by 2**b, increment the result to get the ceiling

if not divisible:

result += 1

-

return result

```

-[Explanation]

This function calculates the ceiling of `n / 2**b` purely through bitwise manipulation and integer arithmetic. The assertions ensure the input constraints are met, as specified.

[Tests]

-To verify the correctness of the function, we can run the following tests:

+To verify the correctness of the function, we can compare the results of our function with the expected results obtained using `math.ceil` to ensure the correctness of the ceiling division operation:

```python

import math

@@ -202,8 +145,6 @@ assert ceil_divide_without_div(0, 3) == math.ceil(0 / 2**3)

assert ceil_divide_without_div(1, 0) == math.ceil(1 / 2**0)

```

-These tests compare the results of our function with the expected results obtained using `math.ceil` to ensure the correctness of the ceiling division operation.

-

### Example 3

[Code]

isprime = n >= 2 and 1 or 0

@@ -221,54 +162,15 @@ def prime(x):

lastn = lastn + 1 # Check the next number

[Property]

-category: code generation (class implementation)

+category: class implementation

language: Python

-concepts: prime number memoization, n-th prime calculation, efficiency in prime checks

difficulty: medium

+concepts: prime number memoization, n-th prime calculation, efficiency in prime checks

[Instruction]

Implement a Python class with a method `find_nth_prime_memoized(n)` that returns the nth prime number, where n is a positive integer. Utilize memoization to store already found prime numbers in a list within the class to avoid recalculating primes for multiple method calls. Ensure the method efficiently updates and references this list to find new primes as needed. Additionally, enhance performance by avoiding unnecessary prime checks.

-[Solution]

-```python

-class PrimeFinder:

- def __init__(self):

- self.primes = [2, 3]

-

- def is_prime(self, num: int) -> bool:

- if num < 2:

- return False

- for prime in self.primes:

- if prime * prime > num:

- break

- if num % prime == 0:

- return False

- return True

-

- def find_nth_prime_memoized(self, n: int) -> int:

- if n <= len(self.primes):

- return self.primes[n - 1]

-

- current_num = self.primes[-1] + 2

- while len(self.primes) < n:

- if self.is_prime(current_num):

- self.primes.append(current_num)

- current_num += 2

-

- return self.primes[n - 1]

-

-# Tests

-first_few_primes = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]

-prime_finder = PrimeFinder()

-for i, prime in enumerate(first_few_primes, 1):

- assert prime_finder.find_nth_prime_memoized(i) == prime

-# Cached result

-for i, prime in enumerate(first_few_primes, 1):

- assert prime_finder.find_nth_prime_memoized(i) == prime

-```

-

[Response]

-[Reasoning]

Implementing the required Python class with the method `find_nth_prime_memoized(n)` involves several key steps:

1. The class should have a constructor `__init__` that initializes the cache list to store the memoized prime numbers. Initially, the list can be seeded with the first two prime numbers, 2 and 3.

@@ -278,7 +180,6 @@ Implementing the required Python class with the method `find_nth_prime_memoized(

- It only checks up to the square root of the number to be tested, implemented by comparing the square of the prime with the number.

- It skips even numbers by incrementing by 2 for each prime check. This is sound since the cache is initialized with the first **two** primes, 2 and 3.

-[Implementation]

Here is how you could implement such a class:

```python

@@ -297,42 +198,31 @@ class PrimeFinder:

return True

def find_nth_prime_memoized(self, n: int) -> int:

- # Return the n-th prime directly if already in cache

if n <= len(self.primes):

return self.primes[n - 1]

- # Start checking from the number after the last prime in cache

current_num = self.primes[-1] + 2

while len(self.primes) < n:

if self.is_prime(current_num):

- # Cache the new prime

self.primes.append(current_num)

- # Skip even numbers

current_num += 2

return self.primes[n - 1]

```

-[Explanation]

-This class `PrimeFinder` initializes with a cache of the first two primes. The `is_prime` method checks if a number is prime by dividing it with cached primes up to its square root. The `find_nth_prime_memoized` method uses this helper method to find and cache primes up to the requested `n`th one. Subsequent calls leverage cached primes for efficiency.

-

[Tests]

-Here's how you can test this class:

+Here's how you can test this class by verifying that the class correctly finds the first few primes and that subsequent calls return the memoized results directly:

```python

-# Test that the class correctly finds the first few primes

first_few_primes = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]

prime_finder = PrimeFinder()

for i, prime in enumerate(first_few_primes, 1):

assert prime_finder.find_nth_prime_memoized(i) == prime

-# Test that the memoized results are returned directly

for i, prime in enumerate(first_few_primes, 1):

assert prime_finder.find_nth_prime_memoized(i) == prime

```

-These tests verify that the class correctly finds the first few primes and that subsequent calls return the memoized results directly.

-

### Example 4

[Code]

return (a + 1) * (b + 1) - 1

@@ -352,10 +242,10 @@ def multiset_powerset(multiset):

while i >= 0 and not changed:

[Property]

-category: code generation (function implementation)

+category: function implementation

language: Python

-concepts: special formula implementation, odd and even number handling, function composition

difficulty: easy

+concepts: special formula implementation, odd and even number handling, function composition

[Instruction]

Here are two special formulas:

@@ -373,31 +263,9 @@ $$

Write a Python function to return $f_2(f_1(a, b))$ for given `a` and `b`.

-[Solution]

-```python

-def f1(a, b):

- return (a + 1) * (b + 1) - 1

-

-def f2(k):

- if k % 2 == 0:

- return (k**2) / 2 + 2 * k + 1

- else:

- return ((k + 1)**2) / 2 + k + 1

-

-def f1_f2_combined(a, b):

- return f2(f1(a, b))

-

-# Tests

-assert f1_f2_combined(2, 3) == f2(f1(2, 3))

-assert f1_f2_combined(4, 5) == f2(f1(4, 5))

-assert f1_f2_combined(6, 7) == f2(f1(6, 7))

-```

-

[Response]

-[Reasoning]

Based on the formulas you provided, we can define two Python functions, `f1(a, b)` and `f2(k)`, respectively, and then combine them to calculate $f2(f1(a, b))$ for given `a` and `b`.

-[Implementation]

Here is the implementation:

```python

@@ -405,17 +273,17 @@ def f1(a, b):

return (a + 1) * (b + 1) - 1

def f2(k):

- if k % 2 == 0: # Check if k is even

+ if k % 2 == 0:

return (k**2) / 2 + 2 * k + 1

- else: # k is odd

+ else:

return ((k + 1)**2) / 2 + k + 1

def f1_f2_combined(a, b):

return f2(f1(a, b))

```

-[Explanation]

In this implementation:

+

- `f1(a, b)` calculates the first formula you've given.

- `f2(k)` calculates the second formula, with a conditional branch for whether `k` is even or odd.

- `f1_f2_combined(a, b)` uses the output of `f1(a, b)` as the input for `f2(k)` and returns the result.

@@ -447,44 +315,19 @@ def tripletsSorting(nums, t):

for i in range(len(nums)):

[Property]

-category: code generation (function implementation)

+category: function implementation

language: Python

-concepts: insertion point for a sorted array, optimized time complexity

difficulty: easy

+concepts: insertion point for a sorted array, optimized time complexity

[Instruction]

Design a Python function that takes a sorted array and a target value, and return a valid index where target can be inserted to maintain the array's sorted order. Optimize the function to run in logarithmic time complexity.

For example, given `array = [1, 3, 5, 5, 6]` and `target = 5`, the function should return either 2 or 3 because 5 presents at both indices 2 and 3.

-[Solution]

-```python

-from typing import List

-

-def search_insert_position(nums: List[int], target: int) -> int:

- left, right = 0, len(nums) - 1

-

- while left <= right:

- mid = (left + right) // 2

- if nums[mid] == target:

- return mid

- elif nums[mid] < target:

- left = mid + 1

- else:

- right = mid - 1

- return left

-

-# Tests

-assert search_insert_position([1, 3, 5, 5, 6], 5) in [2, 3]

-assert search_insert_position([1, 3, 5, 5, 6], 2) == 1

-assert search_insert_position([1, 3, 5, 5, 6], 7) == 5

-```

-

[Response]

-[Reasoning]

To solve this problem efficiently and ensure logarithmic time complexity, we can use a binary search algorithm. Compared with a standard binary search that looks for an exact match, we can modify the algorithm such that when the target is not found, we return the `left` bound, which represents the index where the target can be inserted to maintain the array's sorted order. Since any valid index for insertion is acceptable, we can direclty return the index if there is an exact match.

-[Implementation]

Here is a Python function that implements this approach:

```python

@@ -497,1137 +340,1277 @@ def search_insert_position(nums: List[int], target: int) -> int:

as `target` can be inserted at index 2 or 3 to maintain the sorted order.

"""

left, right = 0, len(nums) - 1

-

while left <= right:

mid = (left + right) // 2

-

- # Directly return the index if there's an exact match

if nums[mid] == target:

return mid

elif nums[mid] < target:

left = mid + 1

else:

right = mid - 1

-

- # At this point, `left` is the correct insertion index

return left

```

-[Explanation]

This implementation ensures that when `target` is not found, `left` represents the correct insertion index. This is because at the end of the loop, all elements to the left of `left` are smaller than `target`, all elements to the right of `left` are equal to or larger than `target`, and `left > right`. Therefore, `left` is the correct index for insertion to maintain the sorted order.

[Tests]

-To test this function, you can use the example you provided:

+You can test the function with scenarios where the target is found at multiple indices and where the target is not present in the array:

```python

-# Provided example

assert search_insert_position([1, 3, 5, 5, 6], 5) in [2, 3]

-# Additional cases

assert search_insert_position([1, 3, 5, 5, 6], 2) == 1

assert search_insert_position([1, 3, 5, 5, 6], 7) == 5

+assert search_insert_position([1, 3, 5, 5, 6], 0) == 0

```

-These tests cover the scenario where the target is found at multiple indices, as well as cases where the target is not present in the array but needs to be inserted at the correct position to maintain the sorted order.

-

### Example 6

[Code]

-files = ['kitti_all_train.data',

- 'kitti_all_train.labels',

- 'kitti_all_test.data',

- 'kitti_all_test.labels']

-

-for file in files:

- if file not in os.listdir(data_dir):

- zip_path = os.path.join(data_dir, 'kitti_features.zip')

- target_path = os.path.dirname(zip_path)

- print("Extracting {} to {}...".format(zip_path, target_path))

- with zipfile.ZipFile(zip_path, "r") as zip_ref:

- zip_ref.extractall(target_path)

- print("Done.")

- break

-

-X_train = np.loadtxt(os.path.join(data_dir, files[0]), np.float64, skiprows=1)

-y_train = np.loadtxt(os.path.join(data_dir, files[1]), np.int32, skiprows=1)

-X_test = np.loadtxt(os.path.join(data_dir, files[2]), np.float64, skiprows=1)

+def decompress(self):

+ source = self.compressed

+ if isinstance(source, (bytes, bytearray)):

+ return self.decompress_bytes()

+ pos = 0

+ node = self.root

+ res = bytearray()

+

+ while pos < len(source):

+ code = int(source[pos])

+ child = node.children[code]

+ if child.is_leaf:

+ res.append(child)

+ node = self.root

+ else:

+ node = child

+ pos += 1

+

+ return bytes(res)

[Property]

-category: code generation (script implementation)

+category: function implementation

language: Python

-concepts: file I/O, data loading and processing with NumPy, zip file handling

difficulty: medium

+concepts: decoding compressed data, binary tree traversal, byte array processing

[Instruction]

-I have a zip archive `kitti_features.zip` with four files (no top-level directory): `kitti_all_train.data`, `kitti_all_train.labels`, `kitti_all_test.data`, and `kitti_all_test.labels`. I want to load these files into numpy arrays for a machine learning project. The `.data` files are floating points and the `.labels` files are integers, both with a header row.

+Write a Python function `huffman_decompress` that takes two arguments: `compressed_data`, a byte array of 0/1 sequence representing Huffman compressed data, and `huffman_tree`, a Huffman tree that stores the actual data (each leaf is associated with a character). The function should return the original uncompressed data as `bytes`. Define the `HuffmanNode` class yourself as a general tree structure with a `children` list.

-Can you write an initial Python script that extract the zip archive (if not already extracted) and loads the training and testing datasets + labels into appropriate numpy variables.

+[Response]

+To accomplish the Huffman decompression, we first need to define the `HuffmanNode` class, which, as required, should be a general tree structure with a `children` list and an optional `value` attribute to store the character associated with a leaf node.

-[Solution]

+Then, we'll implement the `huffman_decompress` function. This function will traverse the Huffman tree according to the bits in `compressed_data`. When it encounters a leaf node, the associated character will be appended to the result, and the traversal restarts from the root of the tree. This process continues until the entire `compressed_data` is processed.

-[Response]

-To achieve this, you'll need to use `zipfile` to extract the files from the zip archive and `numpy` to load the data into arrays. Since the `.data` files contain `float64` numbers and the `.labels` files contain `int32` integers, both with a header row, to process them appropriately, you can use `np.loadtxt`, specifying data types as `np.float64` for `.data` files and `np.int32` for `.labels` files, while also including `skiprows=1` to bypass the header. Additionally, given that the zip archive doesn't have a top-level directory, the files will be extracted directly into the target directory.

+You said that `compressed_data` is a byte array of 0/1 sequence, so I assume that each byte, not each bit, represents a step in the Huffman tree. In practice, it is more efficient

-Here's a Python script that extracts the files if they haven't been extracted yet and loads the data into numpy arrays:

+Here's how we can implement this:

```python

-import os

-import numpy as np

-import zipfile

+class HuffmanNode:

+ def __init__(self, value=None):

+ self.value = value

+ self.children = []

-def extract_files(zip_path, file_paths, extract_to='.'):

- """Extracts files from a zip archive if they don't already exist."""

- all_files_extracted = all(os.path.exists(file_path) for file_path in file_paths)

- if not all_files_extracted:

- with zipfile.ZipFile(zip_path, 'r') as zip_ref:

- zip_ref.extractall(extract_to)

+def huffman_decompress(compressed_data, huffman_tree):

+ result = []

+ node = huffman_tree

-def load_data(file_name, dtype):

- """Loads data from a file into a numpy array, skipping the header row."""

- return np.loadtxt(file_name, dtype=dtype, skiprows=1)

+ # Each byte represents a bit in `compressed_data`

+ for bit in compressed_data:

+ if len(node.children) > 0:

+ node = node.children[int(bit)]

+ if len(node.children) == 0:

+ result.append(node.value)

+ node = huffman_tree

-def main():

- zip_path = 'kitti_features.zip'

- data_files = {

- 'train_data': 'kitti_all_train.data',

- 'train_labels': 'kitti_all_train.labels',

- 'test_data': 'kitti_all_test.data',

- 'test_labels': 'kitti_all_test.labels'

- }

- extract_files(zip_path, data_files.values())

+ return "".join(result).encode()

+```

- # Load the datasets

- X_train = load_data(data_files['train_data'], np.float64)

- y_train = load_data(data_files['train_labels'], np.int32)

- X_test = load_data(data_files['test_data'], np.float64)

- y_test = load_data(data_files['test_labels'], np.int32)

+In this implementation, each byte in `compressed_data` represents a bit, guiding the traversal of the Huffman tree. Characters are appended upon reaching leaf nodes and the result is then encoded to `bytes`.

- # You now have the datasets and labels loaded into numpy arrays

- print(f"X_train shape: {X_train.shape}, y_train shape: {y_train.shape}")

- print(f"X_test shape: {X_test.shape}, y_test shape: {y_test.shape}")

+[Tests]

+You can test this function with a sample Huffman tree and compressed data to verify that the decompression works correctly:

-if __name__ == "__main__":

- main()

+```python

+root = HuffmanNode()

+root.children = [HuffmanNode('A'), HuffmanNode('B')]

+root.children[0].children = [HuffmanNode('C'), HuffmanNode('D')]

+root.children[1].children = [HuffmanNode('E'), HuffmanNode('F')]

+compressed_data = bytearray([0, 1, 0, 0, 1, 1])

+# 01 -> D, 00 -> C, 11 -> F

+assert huffman_decompress(compressed_data, root) == b'DCF'

```

-This script defines separate functions for data extraction and loading. In the `main` function, it first checks if the data and label files are already extracted. If not, it extracts them from the specified zip archive. Then, it loads the data into `numpy` arrays, ensuring the desired data types and to skip the header row as you requested. Remember to have `numpy` installed in your environment, and ensure that the zip file is in the correct location relative to the script.

-

### Example 7

[Code]

-template >

-class Stack

-{

-public:

- Stack();

-

- Stack(std::initializer_list);

-

- const bool empty() const;

-

- const size_t size() const;

-

- void push(constT&);

-

- void pop();

-

+def format_size(num):

+ """http://stackoverflow.com/a/1094933

+ """

+ for x in ['bytes', 'KB', 'MB', 'GB']:

+ if num < 1024.0 and num > -1024.0:

+ return "%3.1f%s" % (num, x)

+ num /= 1024.0

+ return "%3.1f%s" % (num, 'TB')

+assert format_size(1024**2 - 1) == '1024.0KB'

+assert format_size(1024*512) == '512.0KB'

[Property]

-category: code generation (class implementation)

-language: C++

-concepts: template-based generic stack implementation, STL container usage

-difficulty: medium

+category: function implementation

+language: Python

+difficulty: easy

+concepts: arithmetic operations for size conversion, rounding numbers, dynamic unit selection, string interpolation

[Instruction]

-Implement a generic stack class in C++ that allows the user to create a stack for any data type. The class should be template-based, enabling type flexibility. Your implementation should support basic stack operations such as `push`, `pop`, `top`, and checking if the stack is `empty`. Additionally, include a method to retrieve the current size of the stack. For the underlying container, use the Standard Template Library's `std::vector` as the default storage mechanism. This will provide a dynamic array that can efficiently resize itself as needed.

-

-[Solution]

+Create a Python function to format a file size in bytes into a human-readable string representation, using 'bytes', 'KB' (kilobytes), 'MB' (megabytes), 'GB' (gigabytes), or 'TB' (terabytes) units. The output should be rounded to one decimal place and follow the format: "{X.Y}{Unit}", where "Unit" is the appropriate size unit and "X.Y" is the rounded size. For example, `format_size(1024 * 512)` should return `'512.0KB'`, and `format_size(1024**2 - 1)` should return `'1024.0KB'`.

[Response]